John Surico relays some actual good news out of the Big Apple when it comes to criminal justice:

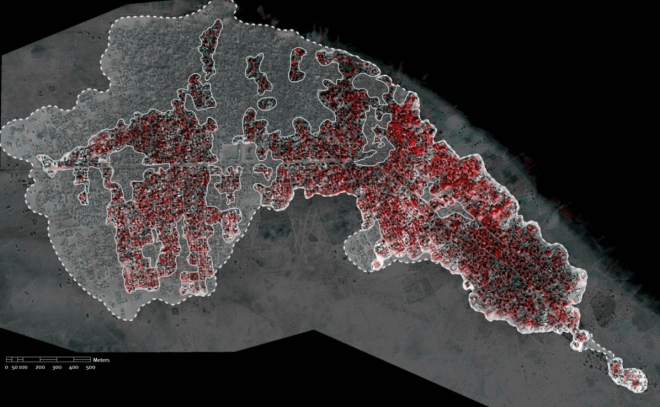

New York City’s Rikers Island, the second-largest jail in America, has been making headlines for the worst possible reasons lately. Between the ritualized beating of teenage inmates and horrendous treatment of mentally ill prisoners, it’s no wonder the feds are suing NYC over civil rights violations at the sprawling complex.

So it came as something of a surprise when, on Tuesday morning, New York City officials announced that, starting next year, Rikers Island will no longer hold anyone in solitary confinement under the age of 21, making it the first jail of its kind to do so in the country. Furthermore, no one—regardless of age—will be allowed to suffer in solitary for more than 30 consecutive days, and a separate housing unit will be established for those inmates most prone to violence.

Suffice it to say this is a big deal: Rikers Island just went from being one of the most dysfunctional jails in America to tentatively claiming a spot at the vanguard of the emerging criminal justice reform movement.

Major props to Mayor de Blasio, who last March appointed a new jails commissioner who promised to “end the culture of excessive solitary confinement.” But not all the news from Rikers was good this week:

A clean criminal record is not a requirement for a career as a Rikers correction officer, the Times reports. Got a gang affiliation and multiple arrests? No problem! Deep-seated psychological issues? Can’t hurt to apply! The Department of Investigation reviewed the Correction Department’s hiring process and discovered “profound dysfunction.” …

This administrative horrorshow does go a long way toward explaining why Rikers has found itself embroiled in so many awful situations, which include but are not limited to the inmate who was beaten and sodomized by a Correction Officer, the teen who died in solitary from a tear in his aorta, and the mentally ill man who died in solitary after guards declined to check on him.

Indeed, violence against prisoners has been at a record high:

Though the de Blasio administration promised reforms and a federal prosecutor’s report brought new attention to a “deep-seated culture of violence” at Rikers Island, in 2014 New York City correction officers set a record for the use of force against inmates. According to the Associated Press, guards reported using force 4,074 times last year, up from 3,285 times the previous year.

Back to the issue of solitary, it’s worth revisiting Jennifer Gonnerman’s great piece on Kalief Browder, who was sent to Rikers at 16 after being accused, but never convicted, of taking a backpack. He ended up spending three years there, most of it in solitary. A snapshot:

[Browder] recalls that he got sent [to solitary] initially after another fight. (Once an inmate is in solitary, further minor infractions can extend his stay.) When Browder first went to Rikers, his brother had advised him to get himself sent to solitary whenever he felt at risk from other inmates. “I told him, ‘When you get into a house and you don’t feel safe, do whatever you have to to get out,’ ” the brother said. “ ‘It’s better than coming home with a slice on your face.’ ”

Even in solitary, however, violence was a threat. Verbal spats with officers could escalate. At one point, Browder said, “I had words with a correction officer, and he told me he wanted to fight. That was his way of handling it.” He’d already seen the officer challenge other inmates to fights in the shower, where there are no surveillance cameras.

Browder later tried to commit suicide by slitting his wrists with shards of a broken bucket. Also from Gonnerman’s piece:

Between 2007 and mid-2013, the total number of solitary-confinement beds on Rikers increased by more than sixty per cent, and a report last fall found that nearly twenty-seven per cent of the adolescent inmates were in solitary. “I think the department became severely addicted to solitary confinement,” Daniel Selling, who served as the executive director of mental health for New York City’s jails, told me in April; he had quit his job two weeks earlier. “It’s a way to control an environment that feels out of control—lock people in their cell,” he said. “Adolescents can’t handle it. Nobody could handle that.”

The video above tells the story of another teen held in solitary. Previous Dish on the inhumane practice here.